«Goodbye Intuition»!

Tuesday, February 27, from 12 PM to 1:30 PM, in Levinsalen at the Norwegian Academy of Music, the improvising musicians Andrea Neumann (Germany), Morten Qvenild and Ivar Grydeland will play duets with stubborn computers developed in collaboration with us. The machines engage in the improvised interaction based on their own logic.

The LAB concerts consist of artistic trials and conversations with the audience and form an important part of the project “Goodbye Intuition”. The talks are led by commentator Henrik Frisk. This is the first concert in the series.

The event is free.

About the project:

In Goodbye Intuition (home page), the participants improvise with “creative” machines. Playing is at the core of their investigation, and through experience they ponder the following questions:

- How do we improvise with “creative” machines, how do we listen, how do we play?

- How will improvising within an interactive human-machine domain challenge our roles as improvisers?

- What music emerges from the human-machine improvisatory dialogue?

Goodbye Intuition investigates how the perception of human musicians who improvise together, and that of their audience, changes when real-time computer-generated audio responses, related to the improvised musical material, act as a representation of an autonomous agent.

The project investigates this cooperation between humans and autonomous applications, which range from simple chance operations to implementations of more complex learning algorithms. The ultimate aim is more sophisticated, possibly even closely humanlike, musical interaction from the computer. This makes it possible to study how a fairly self-consistent yet unpredictable agent influences the human musicians, and to note how their perspective changes during these musical sessions.

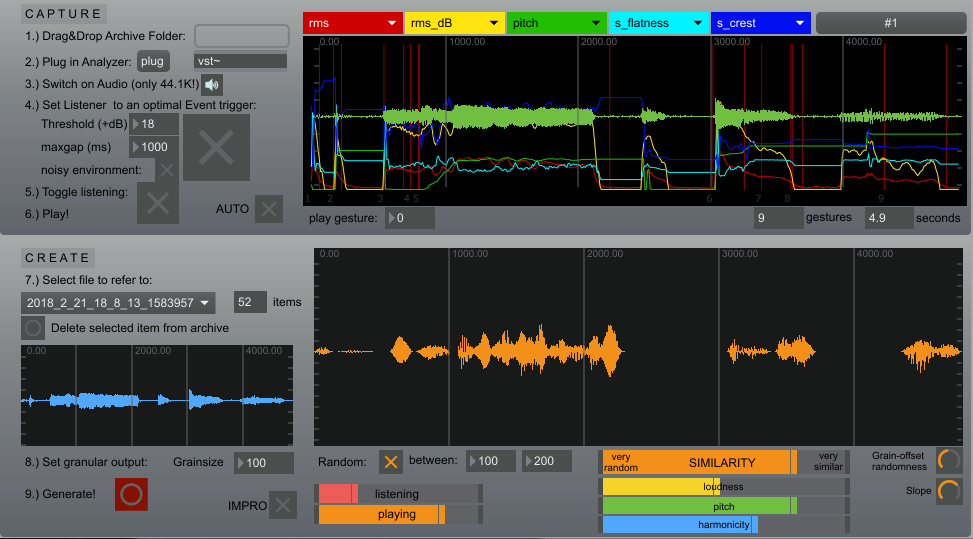

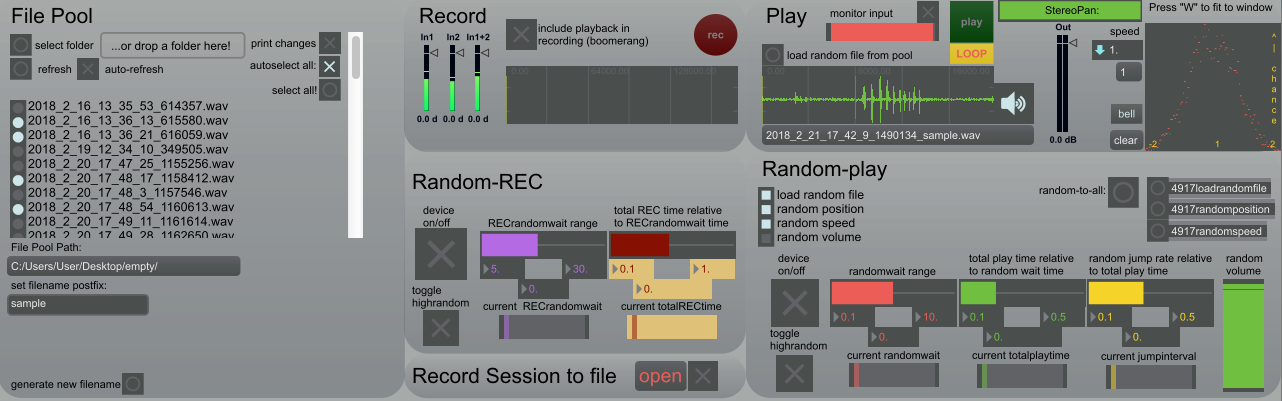

The computer applications are provided to the musicians by Notam. The aim is a set of applications with fairly modular abstractions for the different functions: autonomous event detection with recording and/or playback in response; the creation of different archives of the captured material; real-time and offline feature extraction, database collection and supervised learning based on it; and, last but not least, the design of musical behaviour imposed on a variety of output options, such as altered playback, granular reconstruction and MIDI generation.

Screenshot by Bálint Laczkó

Screenshot by Bálint Laczkó

Currently, there are two main applications under development. Both focus on archiving the material at different levels of detail and generate audio output through either altered playback or granular reconstruction of a given captured material, based on several similarity criteria.
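To give a rough idea of what selecting captured material by a similarity criterion can look like, here is a minimal Python sketch. It is not the project's actual code: the feature names (loudness, brightness, noisiness), the archive contents, the choice of cosine similarity and the function names `cosine_similarity` and `most_similar` are all assumptions made for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A silent (all-zero) vector has no direction; treat it as dissimilar.
        return 0.0
    return dot / (norm_a * norm_b)

def most_similar(archive, query):
    """Return the name of the archived segment closest to the query features."""
    return max(archive, key=lambda name: cosine_similarity(archive[name], query))

# Hypothetical archive: segment name -> [loudness, brightness, noisiness]
archive = {
    "soft_low": [0.2, 0.1, 0.1],
    "loud_bright": [0.9, 0.8, 0.3],
    "noisy": [0.5, 0.4, 0.9],
}

# Hypothetical features of what the musician is playing right now.
query = [0.8, 0.9, 0.2]

print(most_similar(archive, query))  # prints "loud_bright"
```

In a setup like this, the chosen segment could then be handed to altered playback or granular reconstruction. Choosing the least similar segment instead would be one simple way to make the machine behave more "stubbornly".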

The developer of these applications is Bálint Laczkó, a Hungarian composer currently working at Notam on several projects. He is also a live-electronics performer and the developer of a number of audio-related applications. He received his master's degree in classical and electroacoustic composition at the Liszt Ferenc Academy of Music in Budapest. His main fields of interest in music are chamber and ensemble music, 3D audio, material-based sound design, and the application of different machine learning algorithms (and, more generally, algorithmic solutions) in composition. A number of his works are available to listen to on his SoundCloud page: