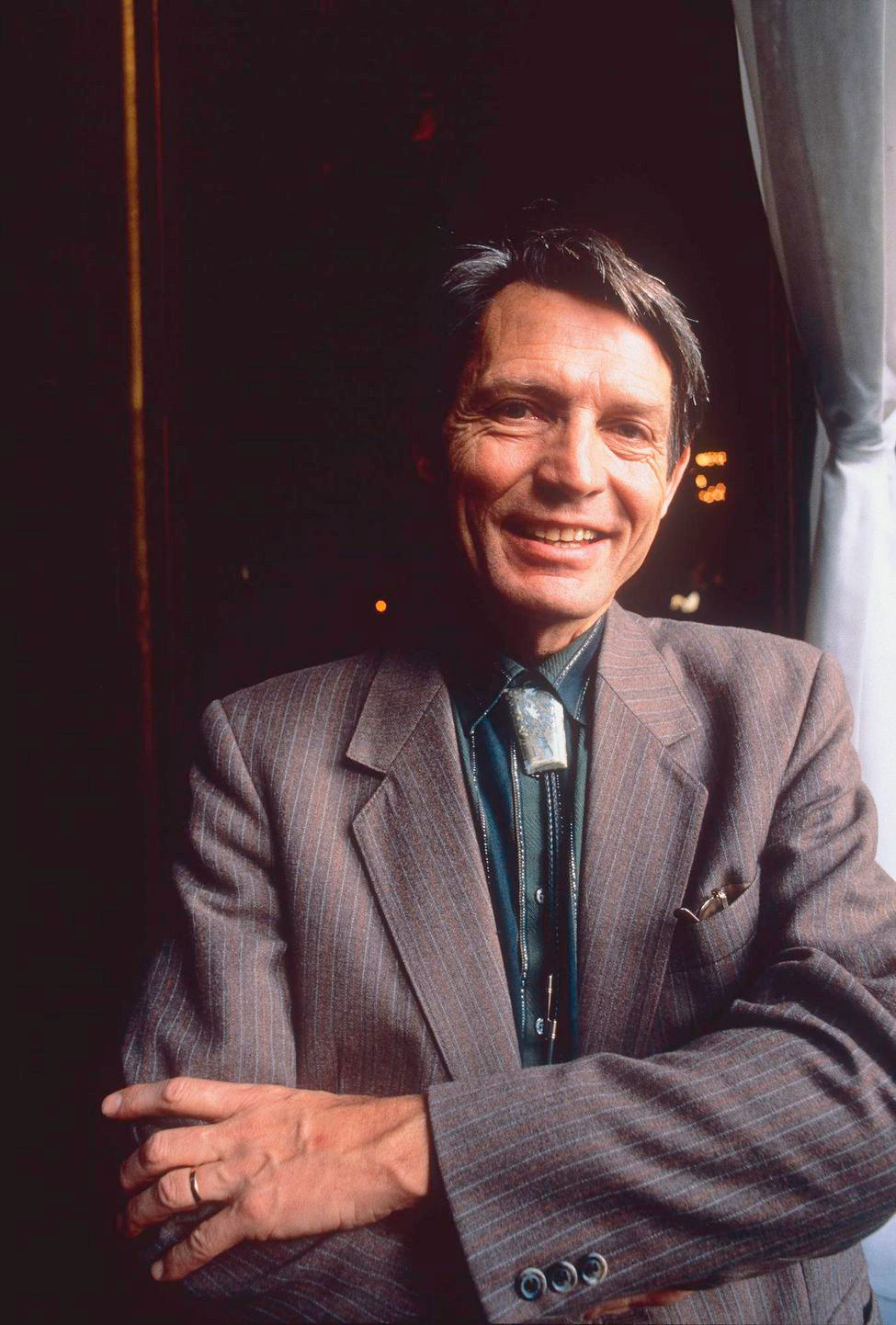

Jean-Claude Risset (1938 – 2016)

The ranks of music technology pioneers have been thinning rapidly over the last few years, and on November 21, Jean-Claude Risset passed away. As a musician Risset was not well known in Norway, but his contributions to music research have had such an impact that everyone who works with digital sound processing today benefits from them. It is important to acknowledge his passing in Norway as well.

Jean-Claude Risset had a many-faceted career as a pianist, composer and researcher. He last visited Norway in June 2014, when he came to record a CD of piano works at Rainbow Studio, run by the eminent sound engineer and producer Jan Erik Kongshaug. Risset had been to Norway several times before, at the invitation of NICEM and Notam, and he also contributed to a conference that Notam produced for the Warsaw Autumn Festival in 2010.

I came to know Risset’s music when I was a student at New York University in the late 1980s and Judy Klein introduced me to Sud (1985), one of his most important works. In this piece, Risset mixes digital recordings with synthetic reconstructions of the same sounds in a rich narrative with clearly recognizable historical and cultural themes. Sud is one of the most analyzed works in the computer music repertoire. To me personally, Risset has been a musical inspiration. In my homage to musical predecessors in When Timbre Comes Apart (1992-95), a bell timbre from Risset opens the piece and gives formal structure to the first section.

As a student at Université de Paris in the early 1960s, Risset came across an article by Max Mathews about the programming language Music III. Max Mathews is considered the defining pioneer in computer music, and his work in getting machines to play sound has been heard by anyone who has seen 2001: A Space Odyssey. The computer HAL (IBM with each letter shifted one step back in the alphabet) sings “Daisy”, and Max Mathews was responsible for the programming.

https://www.youtube.com/watch?v=OuEN5TjYRCE

But where Mathews says that he largely concentrated on making demonstrations and languages for controlling tones, it was Risset who first understood the significance of the spectrum for how the ear recognizes music and sound. In the early 1960s, this detailed understanding was still lacking, although everyone could easily hear how instruments varied in the attacks and decays of their tones. Synthetic reconstructions did not sound particularly natural, so clearly something was not yet fully understood, and this held back the musical development of the technology.

While at Bell Labs, Risset analyzed different tones using a computer, and found that the partials behaved differently: they were not equally strong, and they did not develop at the same rate. His pioneering study from 1965/66 is an analysis of trumpet tones. As students of computer music, we were introduced to this research when the field was becoming established as a discipline. Risset followed up his research with a large catalogue of instructions for how to recreate different types of sounds in the programming language Music V, which he developed together with Mathews. The catalogue was published by Bell Labs in 1969, one year before Risset participated in Knut Wiggen’s conference Music and Technology in Stockholm. The conference gathered central music technology pioneers for discussions about current key issues in computer music, and Risset’s contribution included a discussion of how to control analog equipment by digital means, which was necessary for producing more precise results. This was a period of significant technological change, when one began to envision how to compute sound in real time.

Diagram from Risset’s study of trumpet tones, where the different partials have different trajectories.
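
The finding illustrated in the diagram, that each partial follows its own amplitude trajectory, can be sketched as a small additive synthesis routine. The following Python is a minimal illustration only, not Risset's Music V instructions or his measured trumpet data: the envelope shape, the function names and all numeric values are assumptions chosen for the example.

```python
import math

SAMPLE_RATE = 8000  # Hz; kept low so the example stays small


def envelope(t, attack, decay_rate):
    """Linear rise over `attack` seconds, then exponential decay."""
    if t < attack:
        return t / attack
    return math.exp(-decay_rate * (t - attack))


def additive_tone(f0, partials, duration):
    """Sum sine partials, each scaled by its own time-varying envelope.

    `partials` is a list of tuples:
    (harmonic_number, peak_amplitude, attack_seconds, decay_rate).
    """
    n_samples = int(SAMPLE_RATE * duration)
    samples = []
    for i in range(n_samples):
        t = i / SAMPLE_RATE
        value = 0.0
        for harmonic, amp, attack, decay_rate in partials:
            phase = 2 * math.pi * harmonic * f0 * t
            value += amp * envelope(t, attack, decay_rate) * math.sin(phase)
        samples.append(value)
    return samples


# Hypothetical values: higher partials are weaker, rise later and fade faster.
PARTIALS = [
    (1, 1.00, 0.02, 2.0),
    (2, 0.60, 0.03, 3.0),
    (3, 0.40, 0.05, 4.0),
    (4, 0.25, 0.07, 6.0),
]

tone = additive_tone(220.0, PARTIALS, 0.5)
```

The resulting list of samples could be written to a sound file with Python's standard `wave` module. Giving every partial the same envelope instead tends to produce a more static tone, which is one way to hear why independent trajectories matter.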

At the conference in 1970, Risset also discussed psychoacoustics: how humans typically perceive acoustic phenomena. Whereas acoustics is an objective science, psychoacoustics also considers human factors: our hearing is predisposed in specific ways, we do not perceive all sounds equally well, and cultural and individual factors play a role in this. The computer has been important in the development of psychoacoustics, where precise control of sound has made systematic investigations and advanced knowledge possible. In technology-based music, this knowledge is important for composers. At the conference in 1970, this position was held not only by Risset but also by Pierre Schaeffer, often credited as the inventor of concrete music, and by the founding director of EMS, Knut Wiggen. The Norwegian Arild Boman was also present at the conference. Boman was the first person in Norway to work with computerized sound for musical purposes.

Following the period at Bell Labs, Risset became responsible for computer music when the French center Ircam was established in the early 1970s. After his engagement there, he moved to Marseille and took up a post as researcher at CNRS (National Center for Scientific Research). Interestingly, the development of the synthesizer, which actually had its base in computer music, occurred during the period when Risset was at Bell Labs. Synthesizer pioneer Robert Moog had a lively correspondence with Risset about modularization and parametrization concepts prior to launching his instruments. Risset’s work was hugely significant for the understanding of musical spectra, and of how they can be reconstructed by digital means.

Jøran Rudi

Composer and former director of Notam.